This article explores the ongoing interaction between human intelligence and artificial intelligence (AI), with a particular focus on the concept of algorithmic empathy – a type of simulated affective response with tangible educational value – and on the dynamics of digital co-creation. Drawing on interdisciplinary perspectives and on qualitative data from semi-structured interviews with 57 teachers and 542 students in Romania, thematically analysed to provide in-depth insights, the study examines how AI systems can support learning, foster innovation, and adapt to users’ cognitive and emotional needs. The analysis identifies key factors that shape effective human-AI communication, such as digital literacy, trust, personalization, and ethical awareness. While AI is perceived as a valuable tool for enhancing educational processes and decision-making, challenges related to transparency, over-reliance, and reduced human connection are also highlighted. The findings show that meaningful human-AI collaboration requires not only technological refinement but also a critical and ethical rethinking of the roles both actors play in a shared digital ecosystem. The study underscores the importance of algorithmic empathy and conversational digital literacy in sustaining user motivation, while warning against dependency and the erosion of critical inquiry. In educational settings, AI is best positioned as a complement to, not a substitute for, the human educator. These insights reinforce the need for human-centred, ethically grounded AI integration strategies, especially in environments where learning, equity, and emotional support intersect.

____________________

Journal of Digital Pedagogy – ISSN 3008-2021

2025, Vol. 4, No. 1, pp. 56-69

https://doi.org/10.61071/JDP.2540

____________________

1. Theoretical Background and Study Purpose

In the age of “free intelligence,” artificial intelligence (AI) has become a key issue for contemporary society, influencing a wide range of aspects of everyday life, from healthcare and education to business and entertainment. Effective communication between AI and human users is essential to the proper functioning of these systems. This communication involves transferring information in a way that is easily understandable for humans and processable by AI (Floridi et al., 2018). As AI grows more sophisticated, the importance of this interaction also increases, especially as society becomes more dependent on such technologies. The main goal of this article is to examine, through a multidisciplinary lens, the factors that enable efficient communication, while also addressing the related challenges and emerging opportunities.

This study explores the evolving relationship between artificial intelligence (AI) and human cognition, highlighting how these two types of intelligence can collaborate to boost efficiency and drive innovation. It delves into the various elements that shape human-AI communication, particularly focusing on both ethical challenges and technological developments. The discussion also covers how different industries are being transformed by this interaction and considers future growth opportunities. A key insight from the analysis is that true innovation depends on balancing technical advancements with the development of human capabilities. Encouraging this balance helps us tackle complex problems more effectively. The research also points to the importance of upholding ethical principles to address the risks that could arise from widespread AI implementation (Russell & Norvig, 2021). Over the years, the human-machine relationship has evolved from the simple use of technological tools to a complex interaction involving artificial intelligence (Bostrom, 2014). Thus, communication between AI and human intelligence is crucial for building an effective partnership. This article explores these aspects, emphasizing the importance of developing AI systems that can adequately interpret and respond to users’ needs.

A key element in understanding human-AI communication is the socio-cultural context in which this interaction takes place. Natural language, social norms, empathy, and the ability to interpret intent are core features of human communication, yet integrating these into AI systems remains a major challenge (Dignum, 2019). Therefore, studying how to facilitate effective communication between humans and AI calls for an interdisciplinary and transdisciplinary approach, drawing from cognitive science, linguistics, psychology, ethics, and computer science.

Furthermore, the motivation to explore this topic stems from the urgent need to create a digital education system that aligns with today’s realities. In a world increasingly shaped by algorithms, digital literacy is no longer optional – it is a fundamental skill. Effective communication with AI requires not only technical knowledge, but also the ability to critically assess information, understand automated decisions, and engage with technology in an ethical and responsible way (Luckin et al., 2016).

The exponential increase in the use of AI in education – through adaptive platforms, conversational agents, and automated assessment systems – raises important questions about the identity and autonomy of both students and teachers. Within this context, the present study aims to establish a solid theoretical framework for understanding and improving human-AI communication, with the broader goal of contributing to the development of sustainable, human-centred educational practices.

The emergence of generative artificial intelligence, such as language models like ChatGPT, has fundamentally transformed the dynamics of digital interaction. These tools no longer serve merely as search engines or data processors, but as entities capable of sustaining coherent dialogue, offering feedback, and even supporting learning and reflection (Zawacki-Richter et al., 2019). For this reason, understanding how AI systems interpret user intent, emotions, and knowledge levels is becoming increasingly essential.

Furthermore, this digital transformation is accelerating the transition from a traditional, transmission-based pedagogy to one that is collaborative and exploratory, where technology becomes an active participant in the educational process. Communication between the user and AI gains formative significance, as such interaction encourages critical thinking, creativity, and self-directed learning. Nevertheless, there is a concern that over-reliance on automated responses could lead to cognitive passivity and a reduction in authentic human interaction (Selwyn, 2019).

Daugherty and Wilson (2018), in their work on collaboration between humans and machines, emphasize that artificial intelligence should not be seen as a replacement for human effort, but rather as a tool to enhance it. Within the educational setting, this viewpoint aligns with the idea of AI-supported teaching, where the role of the teacher remains essential. AI, in this case, serves to personalize and simplify learning experiences, making the process more efficient without removing the human element.

The rationale for this study is also supported by the global context shaped by the COVID-19 pandemic, which has accelerated the adoption of digital technologies in education while simultaneously exposing deep-seated inequalities in access, gaps in technological literacy, and ethical challenges related to the mass use of AI. Thus, the relevance of these systems lies not only in their technical capabilities, but also in how they are perceived, accepted, and applied by teachers and students in real-world learning environments (Van den Berghe et al., 2021).

The article draws attention to the importance of focusing on human experience, especially in the context of how teachers engage with artificial intelligence. It argues that successful communication with AI is not only a matter of how the system performs technically; equally important are the cultural, social, and educational factors that shape how it is used. Rather than moving straight to trusting AI, the study suggests first fostering open, respectful conversations grounded in shared values, ethical integrity, and mutual understanding.

1.1. Communication channels

Artificial intelligence interacts with people through several communication channels:

- Text-based interfaces: One common method is through text-based platforms, such as chatbots and virtual assistants. These tools interpret what users type and respond accordingly. They use natural language processing (NLP) to mimic human conversation. Programs like ChatGPT are notable examples, continuously refining their interactions based on user feedback (Floridi et al., 2018).

- Voice-based interfaces: Systems like Alexa and Siri use voice recognition combined with NLP to understand spoken commands and perform tasks such as playing music or controlling smart home devices. Their ongoing development aims to enhance the naturalness and fluidity of voice interactions (Russell & Norvig, 2021).

- Visual interfaces: Tools such as dashboards, interactive visualizations, and augmented reality applications are widely used in education, creative industries, and complex data analysis. Augmented reality, for instance, enables users to overlay digital information onto the physical world, supporting faster and more intuitive decision-making (Bostrom, 2014).

- Embodied AI in physical robots: Robots equipped with AI capable of emotional and physical interaction are increasingly integrated into fields such as education, healthcare, and public administration (Floridi et al., 2018).

- Collaborative platforms: These tools aim to support meaningful collaboration between people and AI, particularly in situations that require teamwork, detailed coordination, or the handling of large amounts of data (Russell & Norvig, 2021).

1.2. Challenges in communication

- Lack of empathy: AI lacks the ability to fully grasp human emotions in the way a human conversational partner can. For example, a chatbot may detect certain emotional cues, but its interpretation remains limited by predefined data sets. This shortcoming can lead to impersonal or inappropriate interactions (Floridi et al., 2018).

- Language barriers: The complexity of natural language – including ambiguity, figurative expressions, and metaphor – can be difficult for AI to interpret. Challenges in understanding dialects, domain-specific jargon, or culturally embedded phrases can hinder accessibility and broader adoption (Russell & Norvig, 2021).

- Ethical and privacy concerns: The use of personal data in AI systems raises critical issues regarding data protection and information security. Security breaches may result in loss of user trust and serious legal implications (Bostrom, 2014).

- Technological limitations: Certain AI systems struggle with processing complex or contextually nuanced queries. In such cases, human intervention is often required to avoid errors or misinterpretations (Floridi et al., 2018).

- Algorithmic bias: AI algorithms may reflect the biases found in their training data. This can lead to unfair or discriminatory outcomes, ultimately undermining both the reliability and societal acceptance of AI technologies (Russell & Norvig, 2021).

1.3. Development opportunities

- Artificial empathy enhancement: The integration of algorithms capable of detecting and responding to human emotions. This includes recognizing facial expressions, analyzing vocal tone, and adapting responses according to the user’s emotional context (Bostrom, 2014).

- Advanced personalization: Customizing communication based on individual user needs and preferences. For example, AI-powered educational platforms can adjust content to align with each learner’s style and pace (Russell & Norvig, 2021).

- Real-time collaboration: Employing AI as a decision-making partner in complex scenarios by providing data-informed support. This is especially valuable in fields like medicine, where AI can process vast amounts of information rapidly to offer precise solutions (Floridi et al., 2018).

- Bias reduction: Advancing machine learning techniques to limit the influence of biases embedded in training datasets, promoting more equitable AI outcomes (Russell & Norvig, 2021).

- Multimodal interface development: Combining text, visuals, audio, and virtual reality to create more intuitive, immersive, and dynamic interaction experiences (Bostrom, 2014).
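
To make the “advanced personalization” item more concrete, the following is a minimal sketch of one very simple way a learning platform could adjust pace: a rule-based pacer that raises or lowers difficulty according to a learner’s recent accuracy. It is a hypothetical illustration, not a description of any platform discussed in this article; the class name `AdaptivePacer`, the five difficulty levels, the five-answer window, and the 0.8 and 0.5 thresholds are all assumptions.

```python
from collections import deque


class AdaptivePacer:
    """Hypothetical rule-based pacer: moves a learner between difficulty
    levels based on accuracy over a rolling window of recent answers."""

    def __init__(self, levels=5, window=5, up=0.8, down=0.5):
        self.level = 1                      # start at the easiest level
        self.levels = levels                # highest available level (assumed)
        self.up, self.down = up, down       # promotion / demotion thresholds (assumed)
        self.recent = deque(maxlen=window)  # rolling record of correct/incorrect answers

    def record(self, correct: bool) -> int:
        """Log one answer and return the (possibly updated) difficulty level."""
        self.recent.append(correct)
        # Only decide once the window is full, so one answer cannot move the level.
        if len(self.recent) == self.recent.maxlen:
            accuracy = sum(self.recent) / len(self.recent)
            if accuracy >= self.up and self.level < self.levels:
                self.level += 1
                self.recent.clear()  # re-measure performance at the new level
            elif accuracy <= self.down and self.level > 1:
                self.level -= 1
                self.recent.clear()
        return self.level
```

With these settings, five consecutive correct answers move a learner from level 1 to level 2, and five consecutive errors move them back. Real adaptive platforms typically rely on richer learner models; the point here is only that “adjusting to pace” can be reduced to an explicit, inspectable rule.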

1.4. Real-world uses of artificial intelligence

- Education: Modern learning platforms powered by AI are increasingly used to personalize instruction. These tools can pinpoint where a student is struggling and offer tailored resources to help them progress (Floridi et al., 2018).

- Healthcare: AI is transforming medical diagnostics and patient care. Intelligent systems can now interpret medical images to catch signs of illness early on (Russell & Norvig, 2021).

- Business: Companies are leveraging AI to enhance strategic planning. Through data analysis and automation, these systems help forecast financial patterns, manage risks, and uncover potential growth areas (Floridi et al., 2018).

- Public Services: Government institutions apply AI to make their internal processes more efficient, reduce red tape, and provide citizens with better access to information (Bostrom, 2014).

- Cybersecurity: AI-driven technologies play a crucial role in identifying and reacting to cyber threats instantly. These systems analyze vast amounts of data to spot and address security issues as they happen (Russell & Norvig, 2021).

2. Methodology

The article adopts an interdisciplinary perspective, combining insights from fields such as computer science, psychology, linguistics, and ethics. The chosen methodology involves a systematic review of the academic literature, followed by a qualitative investigation of real-world cases and practical applications (Floridi et al., 2018), which offer relevant information on the challenges and opportunities in this field.

The qualitative data comprise semi-structured interviews with a total of 57 teachers and 542 students across multiple Romanian schools. A focused thematic analysis of these interviews was conducted to gain deeper insight into recurring patterns in participants’ perceptions.

A further key methodological aspect is the comparative analysis of various existing AI technologies, aimed at identifying their strengths and weaknesses. This approach helped to build a clear overview of the current state of the field and potential future directions for research, with a focus on user impact and communication effectiveness (Russell & Norvig, 2021).

To explore how effective communication occurs between human users and artificial intelligence systems in educational settings, the study employs a qualitative, exploratory methodology. This approach allows for a deeper understanding of user perceptions, behaviours, and motivations, going beyond the quantitative aspects of interaction.

2.1. Research objectives

The primary goals of this methodological endeavour are:

- To identify the factors that influence the quality of communication between humans and AI in educational contexts;

- To analyse how human users (teachers and students) perceive this type of interaction;

- To explore the role of algorithmic empathy and artificial emotional intelligence in facilitating effective communication;

- To assess the potential of AI in supporting student-centred learning.

2.2. Research method

The study is grounded in thematic analysis of data gathered through semi-structured interviews with a sample of 57 teachers and 542 middle and high school students from both urban and rural educational institutions. The interviews aimed to capture subjective yet valuable insights into real-world experiences with AI technologies (e.g., ChatGPT, Google Bard, adaptive platforms) in educational settings.

Criteria for participant inclusion were active involvement in lower or upper secondary education, willingness to participate, and prior exposure to AI-based platforms in an educational context. Participants who lacked basic digital literacy or had no prior contact with AI technologies were excluded. A purposeful sampling strategy was used to ensure diversity in terms of teaching disciplines, geographical location, digital competence, and school environment, thus aiming to reduce selection bias and enhance the representativeness of the findings.

2.3. Rationale for the qualitative approach

A qualitative approach was chosen because it offers an interpretive and flexible framework, which is crucial in an emerging field like human-AI communication. Interaction with intelligent systems involves nuanced contextual factors – such as trust, cognitive style, digital competence, or intrinsic motivation – that cannot be easily quantified. The design combined a comprehensive literature review with semi-structured interviews conducted with 57 teachers and 542 students. Participants were purposefully selected from a wide range of school environments, spanning both urban and rural areas across several counties. The sample encompassed individuals from lower secondary (grades VII-VIII) and upper secondary (grades IX-XII) education. The teacher cohort included professionals from diverse disciplines – such as STEM, humanities, and the arts – with varying levels of familiarity and practical experience in using AI-based educational tools. Analysis of participant discourse provides meaningful insights into the adaptive and personalized nature of AI interaction, as well as the practical challenges users may face.

The student group was similarly diverse, reflecting a broad spectrum of academic orientations, socio-cultural backgrounds, and levels of digital literacy. Care was taken to include learners from institutions with and without prior involvement in AI integration initiatives, in order to maximize contextual diversity and reduce institutional bias.

Each interview, lasting between 30 and 45 minutes, followed an interview guide covering four main areas: (a) firsthand experiences with AI platforms, (b) perceived advantages and potential drawbacks, (c) emotional responses and levels of trust in AI-generated content, and (d) future expectations regarding AI integration in education. Participants were asked open-ended questions such as: “How do you typically use AI platforms in your learning/teaching?”, “Can you describe a positive or negative experience when using AI tools in the classroom?”, “Have you ever felt supported or misunderstood by an AI response?”, “Do you feel AI systems understand your questions and needs?”, “What benefits or risks do you associate with AI in the classroom?”, and “How do you think AI might change your role as a teacher/student in the future?”

Data were analysed thematically, drawing on the six-phase approach developed by Braun and Clarke (2006). Two researchers initially coded the transcripts independently, identifying semantic and latent themes, and reached 84% inter-rater agreement. Discrepancies in code interpretation or theme categorization were resolved through iterative discussions and consensus meetings. An audit trail of coding decisions was maintained throughout the process to ensure transparency and consistency in analysis. For example, in one case, the first researcher assigned the initial code ‘student comfort’ to excerpts describing AI’s polite tone, while the second researcher coded the same excerpts as ‘perceived empathy.’ Following a consensus meeting, both agreed to merge the excerpts under a refined theme called ‘algorithmic empathy,’ and this decision was logged in a shared coding spreadsheet, annotated with justifications and timestamps. While the large sample size lends depth and credibility to the findings, certain limitations remain – notably the potential effects of self-selection and the limited representation of non-formal educational contexts.
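
The article reports 84% inter-rater agreement without naming the statistic. The simplest reading is percent agreement: the share of excerpts to which both coders assigned the same code. The sketch below computes that figure and, as a commonly used alternative, Cohen’s kappa, which corrects for agreement expected by chance. Both the choice of kappa and the function names are assumptions for illustration, not a description of the authors’ procedure, and the example labels are invented rather than drawn from the study data.

```python
from collections import Counter


def percent_agreement(a, b):
    """Share of excerpts to which both coders assigned the same code."""
    if len(a) != len(b) or not a:
        raise ValueError("coders must label the same, non-empty set of excerpts")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Chance-corrected agreement: (p_o - p_e) / (1 - p_e)."""
    n = len(a)
    p_o = percent_agreement(a, b)
    ca, cb = Counter(a), Counter(b)
    # Expected agreement if each coder assigned codes independently
    # at their own observed rates.
    p_e = sum(ca[c] * cb[c] for c in set(ca) | set(cb)) / (n * n)
    return 1.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)


# Invented labels for five excerpts; the coders disagree on one of them.
coder_1 = ["usefulness", "ethics", "empathy", "usefulness", "dependence"]
coder_2 = ["usefulness", "ethics", "comfort", "usefulness", "dependence"]
```

Here the coders agree on 4 of 5 excerpts (0.80), while kappa is about 0.74. Kappa is lower because some agreement would occur by chance, which is why it is often reported alongside raw agreement.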

Data saturation was assessed during the coding phase: the last set of interview transcripts did not yield additional codes or insights beyond those already identified. The stability of thematic patterns across participants, regardless of background or context, further supported the conclusion that saturation had been reached. This reinforces the reliability of the findings and suggests that the analysis captured the range of perspectives relevant to the study objectives.
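
The saturation criterion described here, that the final set of transcripts added no new codes, can be expressed as a simple stopping rule. The sketch below is a minimal illustration under assumed conventions: transcripts are processed in batches, each transcript is represented by the set of codes assigned to it, and saturation is declared when the last `last_k` batches contribute no new codes. The function names and the `last_k` parameter are hypothetical, and the example codes are invented.

```python
def new_codes_per_batch(batches):
    """For each batch (a list of transcripts, each a set of codes),
    count the codes that had not appeared in any earlier batch."""
    seen = set()
    counts = []
    for batch in batches:
        codes = set().union(*batch)  # all codes in this batch
        counts.append(len(codes - seen))
        seen |= codes
    return counts


def saturated(counts, last_k=1):
    """True if the last `last_k` batches introduced no new codes."""
    return len(counts) >= last_k and all(c == 0 for c in counts[-last_k:])


# Invented example: three batches of coded transcripts.
batches = [
    [{"usefulness", "ethics"}, {"empathy"}],
    [{"ethics", "dependence"}],
    [{"usefulness", "empathy"}],
]
```

For this example, the batches contribute 3, 1 and 0 new codes, so the rule reports saturation with `last_k=1` but not with the stricter `last_k=2`. How many code-free batches to require is a judgment call that a study would need to state explicitly.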

Triangulation was employed through cross-validation between the thematic codes of both researchers and comparison with insights from the literature review, enhancing the validity and robustness of the qualitative findings. In addition to methodological triangulation (via independent coding and thematic agreement), theoretical triangulation was applied by juxtaposing participant responses with established frameworks in digital pedagogy and AI ethics. For example, student references to personalized feedback were interpreted in light of self-determination theory (Ryan & Deci, 2000), while concerns about overreliance were discussed through the lens of heutagogy (Blaschke, 2012). Furthermore, data source triangulation was ensured by collecting perspectives from both students and teachers across varied educational contexts. This multi-level triangulation increases the credibility, coherence, and transferability of the study’s findings. Analyst triangulation also contributed to the robustness of interpretations: both researchers brought complementary disciplinary perspectives – education and digital ethics – which enhanced thematic refinement. The convergence of themes across independently coded data and alignment with existing literature not only validated emergent patterns but also provided nuanced insight into divergences between stakeholder groups. Finally, environmental triangulation was partially addressed by including voices from urban and rural institutions, offering contextual depth and illustrating how institutional setting may mediate AI integration and perception.

2.4. Ethical considerations

The research complied with all principles of academic ethics: participation was voluntary, the data were anonymized, and informed consent was obtained prior to conducting the interviews. Additionally, special attention was paid to the protection of personal data, in line with the General Data Protection Regulation (GDPR).

2.5. Results and interpretation

The results derived from the interview analysis were thematically grouped to ensure alignment with the research objectives. The data highlight several recurring trends and shared perceptions regarding the interaction between users and AI systems in educational settings.

2.5.1 Overall perception of AI in education

Most participants expressed a favourable view of AI, seeing it as an innovative, accessible, and motivating tool. Teachers emphasized AI’s potential to provide instant feedback, encourage personalized learning, and reduce time spent on grading and formative assessment. Students particularly appreciated the interactive and “human-like” nature of the experience, especially when using conversational models such as ChatGPT: “Sometimes it feels like you’re talking to a super-smart classmate who never runs out of patience.” (Student, age 15) However, there were also critical perspectives, especially from teachers, who pointed to the risk of overreliance on automated responses and a diminishing capacity for critical thinking: “Students no longer want to understand – they just want the perfect answer, instantly, with no effort.” (Romanian teacher, urban school)

2.5.2 Factors that influence effective human-AI communication

Several key factors were identified that facilitate smoother interaction with AI systems:

- Digital literacy level: Students with stronger tech skills were better able to ask clear questions and interpret AI-generated responses more effectively.

- Clarity in natural language use: Coherent and well-structured input resulted in more accurate and helpful answers, suggesting a strong link between linguistic skills and interaction quality.

- Educational environment: In schools where technology is systematically integrated into teaching, educators tended to demonstrate greater openness and trust in AI.

2.5.3 Algorithmic empathy – perception and limitations

An interesting aspect that emerged was the perception of “empathy” in AI systems. While participants acknowledged the artificial nature of these interactions, many highlighted the polite, calm, and encouraging tone of the responses: “It never scolded me, no matter how weird my question was.” (Student, age 13) However, teachers emphasized that this type of “empathy” cannot replace the authentic teacher-student connection. This observation supports the idea that AI can serve as a supportive tool, but not as a substitute for human relationships in education.

2.5.4 Identified challenges

Among the main challenges reported were:

- Misunderstanding AI responses due to ambiguous phrasing;

- Lack of transparency regarding the source of the information provided;

- A tendency among some students to use AI to avoid cognitive effort.

These findings highlight the importance of fostering critical digital literacy, accompanied by an ethical and reflective approach to AI use in education.

3. Thematic Analysis and Interpretation of Results

Based on a focused thematic analysis of semi-structured interviews conducted with a sample of 57 teachers and 542 lower and upper secondary students, the following key trends were identified regarding the perception and use of AI in educational communication.

One salient theme was that of algorithmic empathy, which emerged strongly among student responses. This concept of “algorithmic empathy” is central to the study’s originality, capturing how simulated, polite responses can produce an affective, motivating experience for learners. While not equivalent to genuine empathy, this type of interaction has tangible educational value. These insights warrant deeper exploration into how algorithmic empathy could be harnessed ethically to support inclusive pedagogical practices. Connecting these findings to the theoretical framework, algorithmic empathy can be interpreted through the lens of socio-constructivist pedagogy, where learning is mediated by dialogue, emotional scaffolding, and relational trust. Although simulated, the empathetic behaviour of AI may serve as a proxy for peer or teacher interaction, reinforcing engagement and reducing anxiety – particularly for students less inclined to participate in traditional formats. This supports the claim by Daugherty and Wilson (2018) that AI should augment, not replace, human educators, and aligns with Istrate et al. (2025), who advocate for emotionally responsive digital pedagogy rooted in human values.

3.1. Key themes identified

Through the coding of participants’ responses, the following central themes were identified:

- Perceived usefulness of AI – Frequently cited by both teachers and students, this theme highlights the appreciation for AI’s clarity, efficiency, and practical utility in educational contexts. AI is viewed as a valuable aid for writing, explanations, and test preparation.

- Ethics and responsibility – The second most common theme reflects concerns about using AI responsibly and with integrity. Participants drew a clear line between leveraging AI as a learning support tool and using it to cheat or avoid intellectual effort.

- Risk of dependence / Academic dishonesty – This theme was especially prevalent among student responses, suggesting that the temptation to rely on AI for quick answers is significant. Teachers expressed concern that this trend could hinder students’ critical thinking development.

- Support for personalized learning – AI is praised for its capacity to adapt explanations to the user’s level of understanding, offering a more personalized and flexible learning environment, especially for students who require additional guidance.

- Empathy – Students commented on the polite and calm tone of AI responses, which made interactions feel emotionally supportive. Even though they understood the empathy was simulated, they still found it comforting and motivating.

- Concerns about teacher replacement – While AI was generally seen as an ally, some teachers expressed valid fears that technology might gradually take over aspects of their instructional roles, particularly in content delivery.

3.2. Overall interpretation

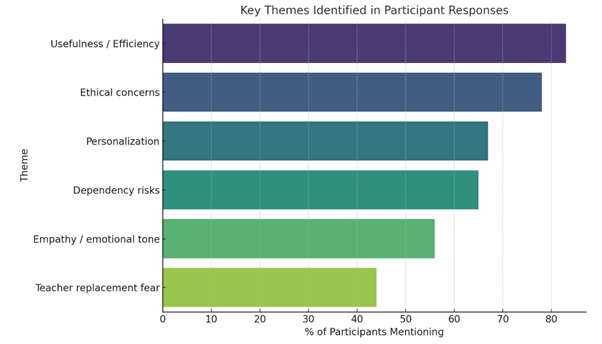

Based on the frequency of thematic references, the infographic (Fig. 1) indicates that human-AI interaction is perceived as both valuable and challenging. There is a noticeable tension between usefulness and risk, between support and superficiality, between technology and humanity. These dualities shape a complex educational reality in which AI must be understood not merely as a technological tool, but as an interface for communication, co-creation, and responsibility.

The findings support the idea that integrating AI into education must be accompanied by the development of digital conversational skills, critical literacy, and a redefinition of the teacher-student relationship within the context of emerging technologies. Effective communication between humans and AI is not an end in itself, but a means to reinforce learning, equity, and innovation in the school of the future.

Figure 1

Key Themes in Human-AI Communication

The infographic above illustrates the most frequently mentioned themes from the interviews conducted with students and teachers about human-AI communication in education.

- The perceived usefulness of AI emerges as the most prominent theme, with a mention rate of 83%. This reflects widespread appreciation for AI’s clarity, speed of response, and practicality in tasks such as writing and explaining complex concepts.

- Ethics and responsibility (78%) emphasize the importance of conscious and honest AI use, particularly in evaluation contexts and in matters of content originality.

- Risk of dependency and support for personalized learning are cited at similar rates (65% and 67%, respectively), indicating a double-edged potential: AI can support learning, but may also encourage surface-level engagement when misused.

- Empathy (56%) and concerns about teacher replacement (44%) are also recurrent themes; educators in particular stressed that while AI is a valuable tool, it cannot replace the relational core of education.
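
The percentages reported for Fig. 1 sum to well over 100% (83 + 78 + 65 + 67 + 56 + 44 = 393), so they cannot be shares of a single total. A reading consistent with this is that each figure is the proportion of participants (or interviews) in which a theme occurred, since one participant can raise several themes. The sketch below computes rates of that kind under that assumption. The input structure and function name are hypothetical, and the four toy participants are not study data.

```python
from collections import Counter


def theme_mention_rates(coded):
    """coded: mapping of participant id -> set of themes coded in that interview.
    Returns, for each theme, the percentage of participants who mentioned it
    (rounded to the nearest whole percent)."""
    n = len(coded)
    if n == 0:
        raise ValueError("no participants")
    # set(themes) ensures a theme counts at most once per participant.
    counts = Counter(theme for themes in coded.values() for theme in set(themes))
    return {theme: round(100 * c / n) for theme, c in counts.most_common()}


# Invented toy data for four participants.
coded = {
    "p1": {"usefulness", "ethics"},
    "p2": {"usefulness"},
    "p3": {"usefulness", "empathy"},
    "p4": {"ethics"},
}
```

For the toy data, the rates are 75% (usefulness), 50% (ethics) and 25% (empathy), which together exceed 100% for the same reason the Fig. 1 figures do.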

This visual synthesis offers a solid basis for shaping educational strategies focused on enhancing digital communication skills, critically integrating AI into curricula, and preserving the essential role of the teacher in a technology-rich learning environment.

Moreover, while the analysis confirms several positive aspects of AI integration in education, it also reveals a series of interpretative limitations, particularly regarding the contextualization of differences between participant groups. For instance, the study does not systematically distinguish between teachers’ and students’ perceptions, despite the fact that these two categories often articulate contrasting views on the utility, risks, and trustworthiness of AI-based systems. The lack of an explicit comparison diminishes the study’s ability to capture the relational dynamics of human-AI interaction as shaped by the participants’ educational roles. Future research would benefit from a more structured comparative analysis between these groups to highlight the influence of professional context, age, and digital experience on how AI is perceived and utilized in educational settings.

The thematic results are predominantly descriptive, focusing on what participants said rather than critically interpreting why they said it or how these perceptions relate to broader theoretical constructs. Although empathy and personalization are frequently mentioned, the analysis does not sufficiently connect these themes with established pedagogical models or existing frameworks in digital education. This limitation reduces the explanatory power of the findings and points to the need for deeper, theory-informed analysis in future studies. For example, the recurrence of personalization and autonomy in participant discourse could be better understood if linked to self-determination theory (Ryan & Deci, 2000), while concerns about cognitive passivity could be interpreted through the lens of heutagogy (Blaschke, 2012), which emphasizes self-directed learning and learner agency in digital environments. Further studies should encourage reflexive dialogue to make fuller use of qualitative insights.

4. Discussion

Recent literature underscores the transformative potential and complex challenges of AI integration in education. Holmes, Bialik, and Fadel (2022) emphasize the necessity of rethinking instructional design in light of AI’s capability to support personalized learning and formative feedback. Similarly, Timms (2022) explores the potential of human–AI collaboration to enhance individualized education, but warns of the risk of displacing teacher roles if not ethically framed. Lockery and Gutteridge (2022) discuss the feasibility of scalable, self-paced learning environments mediated by AI, while highlighting the socio-technical barriers to equitable implementation. Zawacki-Richter et al. (2019) identify a gap in the involvement of educators in shaping AI tools, calling for stronger partnerships between developers and pedagogical experts. Tan et al. (2025) provide a comprehensive review of adaptive AI-enabled learning platforms, emphasizing their ability to dynamically respond to learner profiles, support differentiated instruction, and optimize content delivery based on real-time feedback data. These findings support the theoretical position of this article: that effective human-AI interaction in education must be guided by critical, ethical, and learner-centred approaches.

Another dimension only partially explored in this study relates to age and educational level, as well as the evolving role of conversational digital literacy in shaping user experience. Participants ranged from lower to upper secondary school, yet differences in developmental stage, cognitive maturity, and academic expectations were not systematically analysed. Younger students (grades VII–VIII) often anthropomorphized AI systems and expressed higher emotional engagement with conversational agents, describing them as friendly, patient, or even “smart companions.” In contrast, older students and teachers tended to assess AI through a more functional and critical lens, emphasizing aspects such as response accuracy, reliability, and ethical use. These divergences suggest that perceptions and uses of AI vary not only by role (teacher vs. student) but also by age-related psychological and pedagogical factors. Addressing these layers in future studies could enhance both theoretical insight and the design of differentiated AI-supported learning environments.

The integration of AI in education also raises specific curricular implications that remain underexplored. The findings of this study suggest that curricular frameworks must adapt to accommodate not only technological tools but also the development of transversal competencies such as critical thinking, digital literacy, and ethical reasoning. Incorporating AI tools like conversational agents or adaptive platforms necessitates a reconfiguration of instructional objectives to focus on process-oriented learning rather than mere content acquisition. Moreover, curriculum design should explicitly address the risks of overdependence on AI by embedding metacognitive strategies that help students assess when and how to use such tools responsibly. For teachers, this requires sustained professional development and alignment with competence-based curricular standards that reflect the dynamic interplay between human agency and algorithmic assistance. Finally, curricular innovation should be supported by guidelines that ensure inclusivity, accessibility, and ethical sensitivity across different subjects and educational levels.

Digital pedagogy frameworks emphasize that meaningful integration of AI must go beyond access and infrastructure, embedding ethical reflection and inclusive design into instructional practices (Istrate, Velea, & Ceobanu, 2025). These frameworks also stress the pedagogical value of metacognition, feedback loops, and differentiated instruction, concepts mirrored in this study’s findings on personalization, affective response, and conversational digital literacy. Conversational digital literacy, understood as the ability to navigate, evaluate, and engage meaningfully with AI-mediated dialogue, is particularly critical in preparing learners for emerging digital ecosystems. It fosters not only technical competence but also critical awareness, enabling students to distinguish between simulated empathy and authentic human interaction and to develop reflexive strategies for AI collaboration. Students’ preference for adaptive and dialogic tools aligns with Istrate et al.’s recommendation to restructure the curriculum around digitally mediated learning pathways that support autonomy and inclusiveness.

Communication between AI and human intelligence is not merely a technological process, but also a profoundly human one, involving trust, mutual understanding, and adaptability. Beyond the exchange of data, this relationship must evolve into a type of co-creation, where AI not only assists but also learns from interaction with humans.

As AI becomes more sophisticated, human-machine interaction may develop into an authentic, personalized, and adaptive dialogue. For instance, systems based on deep learning can analyse users’ communication styles and adjust the tone, pace, and level of detail of their responses. In education, this could support differentiated learning; in healthcare, it could mean providing easy-to-understand explanations of complex medical decisions. In the present study, however, some interview interpretations remained at a surface level. Quotations were frequently used illustratively rather than analytically, indicating a need for deeper thematic engagement. This issue is visible throughout the article, where quotations from both students and teachers are presented largely as narrative reinforcement without being critically dissected or theoretically contextualized. For example, a student’s comment that AI feels like a ‘super-smart classmate’ is evocative, but it is not unpacked in relation to learner autonomy, agency, or constructivist theory. Similarly, teachers’ concerns about the erosion of critical thinking are mentioned but not analysed through pedagogical or psychological lenses. A deeper discourse analysis, situating such quotations within broader epistemological or educational frameworks, would have enriched the interpretation and extended the scholarly contribution of the findings. Future studies would benefit from a more layered, theory-informed analysis of participant discourse across all sections of the article.

A major challenge remains the balance between automation and human control. AI requires autonomy to respond efficiently and in real time, but this should not exclude human input. Another challenge is transparency: clearly communicating how AI makes decisions is essential for building user trust. The lack of explainability can lead to resistance to adoption, especially in sensitive sectors such as justice or medicine. Furthermore, the analysis did not systematically examine differences in perception across age groups or levels of education, an area that remains open for future inquiry.

An effective dialogue between humans and AI can open new spaces for innovative collaboration. Rather than competing, the two types of intelligence may join forces in a partnership in which AI optimizes processes and humans contribute ethical, creative, and empathetic dimensions. In the future, we may speak of a new kind of “conversational digital literacy,” in which people learn not only to use AI but also to communicate with it effectively and ethically.

The findings from the literature review highlight several important aspects concerning the communication between artificial intelligence and human intelligence. A key point is that the effectiveness of this interaction largely depends on the quality of AI system design, as well as the level of users’ digital literacy. In other words, technological performance must be accompanied by adequate human preparation in order to maximize the benefits of the interaction.

Another significant aspect is the dynamics of trust: users are more likely to collaborate with an AI system when they perceive it as transparent, consistent, and adaptable. For instance, in the medical field, a patient’s trust in the recommendations of an AI system is directly related to the clarity of its explanations and the transparency of its decision-making history. Thus, communication should not be limited to delivering an outcome but should also include accessible justifications of that outcome.

From an interdisciplinary perspective, differences in expectations emerge across various fields: in education, users seek empathy and personalized feedback, while in business, efficiency and response speed are often prioritized. This diversity underscores the need to develop flexible AI systems that can adapt to context-specific requirements.

Regarding regulation and standardization, particularly in relation to data protection and the ethics of automated decision-making, international initiatives do exist. However, their application often remains uneven and, in some cases, lags behind the rapid pace of technological advancement. This highlights the need for closer collaboration between technology experts, ethicists, legal professionals, and policymakers to shape a coherent and sustainable framework.

Although numerous ethical guidelines for AI have been developed in recent years, Hagendorff (2020) emphasizes that many of them remain vague, inconsistent, or difficult to implement. This lack of normative coherence underscores the urgent need for clear and actionable standards, especially in sensitive domains such as education and healthcare, where the impact of AI directly affects human lives.

Moreover, genuine collaboration between AI and humans requires the development of “conversational intelligence” – not only on the part of machines, but also from human users, who must learn how to engage strategically with these systems to achieve optimal outcomes.

In this context, education becomes a crucial environment for cultivating AI interaction skills. The necessary competencies go beyond the technical use of applications or tools and include the ability to ask relevant questions, critically interpret AI-generated responses, and determine when it is appropriate to rely on automated systems. This process calls for a recalibration of the teacher’s role – one that positions the educator as a facilitator of dialogue between the student and technology, rather than merely a transmitter of knowledge.

Another key point concerns the management of errors and uncertainty in human-AI interaction. Artificial intelligence systems are not infallible and may generate incorrect or inappropriate responses. Educating users to recognize and correct such errors is crucial for maintaining a functional and trustworthy relationship with technology. This necessity gives rise to a new type of literacy – one that is critical and reflective – emphasizing awareness of AI’s limitations as well as its advantages.

The findings also suggest that human-AI interaction varies depending on factors such as age, digital experience, and cognitive style. Younger students tend to anthropomorphize AI more easily, attributing human traits to it, while teachers generally adopt a more rational, function-focused perspective. This contrast can significantly affect the quality of communication and the level of emotional engagement, which in turn influences motivation and receptiveness to learning.

Another issue addressed in the study is the risk of reinforcing cognitive or social biases through AI. When systems are trained on incomplete or biased datasets, they can perpetuate inequalities, stereotypes, or subtle types of discrimination. Effective communication requires more than just technological formatting of responses – it also demands the user’s ability to critically evaluate the content provided. This reinforces the notion that AI should not be used in the absence of clear pedagogical and ethical thinking.

At the same time, a distinction has been observed between passive and active use of AI. Users who merely request “answers” miss out on the systems’ co-creative potential. By contrast, those who use AI to explore ideas, rewrite texts, generate alternatives, or refine their thinking benefit from a more authentic and constructive dialogue. Therefore, fostering an exploratory attitude in human-AI communication is becoming a priority in educational environments.

Moreover, the emotional relationship between the user and the AI influences the level of engagement and the duration of interaction. Participants described experiences in which the AI’s calm and supportive “voice” generated a sense of security, especially during moments of confusion or uncertainty. Such responses fall within the scope of affective computing, a field that paves the way for systems capable of responding not only cognitively but also emotionally, in an adaptive manner.

Furthermore, the findings suggest that effective communication with AI can serve as a tool for educational inclusion. Students with verbal expression difficulties, social anxiety, or learning disabilities may benefit from a non-judgmental, consistent, and accessible interaction that allows them to progress at their own pace. Thus, AI is not only a tool for efficiency but also a potential enabler of equity.

Importantly, this study identifies a significant tension between the utility of AI tools and the growing risk of overdependence, especially among students who use AI to bypass effortful thinking. This dynamic, acknowledged by both students and teachers, calls for a more systematic curricular response to mitigate academic dishonesty and cognitive disengagement.

In conclusion, the discussion reflects a complex reality: human-AI communication is an emerging process that must be understood in all its multidimensionality. It is not enough for AI to learn how to respond accurately – humans must also learn how to ask the right questions, interpret critically, and collaborate ethically. In this ecology of interaction, responsibility is shared, and conversational literacy becomes an essential condition for the future of society.

To address these limitations, future work should incorporate a broader curricular analysis and include comparative studies of different AI platforms, as well as of institutional practices related to AI integration. Although participants referenced tools such as ChatGPT, Google Bard, and adaptive learning environments, the study does not provide a systematic comparative analysis of their perceived strengths, limitations, or differential impacts on teaching and learning. Participants’ remarks nonetheless suggest distinct functional profiles. ChatGPT was praised for its versatility, fluency, and contextual responsiveness in both student and teacher use, yet raised concerns about hallucinations and a lack of verifiable sources. Google Bard, while perceived as more concise and factual, was described as less engaging and less effective in sustaining dialogue. Adaptive platforms (e.g., Knewton, Smart Sparrow) were appreciated for personalized feedback and structured curriculum alignment but criticized for limited interaction and rigid content frameworks. In terms of ethical safeguards, institutional tools embedded in learning management systems (LMSs) were deemed more compliant with privacy regulations, while commercial platforms offered greater flexibility at the cost of transparency. Preferences also varied by user profile: students favoured immediacy and tone (ChatGPT), whereas teachers valued content control and traceability (adaptive systems). These observations suggest that platform choice should be aligned not only with pedagogical goals but also with user expectations and institutional values. A comparative matrix covering usability, trust, personalization, and ethical compliance could support more informed, context-specific decisions, and developing one is an opportunity for future research to guide evidence-informed platform adoption in education.

5. Conclusions and Future Research Directions

Communication between artificial intelligence (AI) and human intelligence is a dynamic field, rich with both challenges and opportunities. The development of more intuitive and ethical AI systems has the potential to revolutionize human-machine interaction, generating significant benefits across multiple domains. However, it is essential that this process be guided by clear principles of ethics and responsibility. As technology continues to advance, it is imperative to ensure that AI remains a tool designed to support, rather than replace, human intelligence. In doing so, a harmonious collaboration between humans and AI can pave the way toward a more innovative and equitable society.

Effective communication between AI and human intelligence is crucial for harnessing the full potential of technology in a sustainable and fair manner. Beyond tools and algorithms, this interaction marks a paradigm shift in how we relate to technology. The relationship is no longer one of passive use but of active collaboration, where AI becomes a partner in learning, decision-making, and creativity.

It is vital that AI be developed within a well-defined ethical framework that safeguards fundamental human values such as privacy, fairness, and autonomy. Equally important is the education and training of users, whether students, professionals, or everyday citizens, as they play a critical role in fostering a healthy relationship with technology.

The future lies not in a competition between human and machine, but in a collaboration where both types of intelligence complement one another. Thus, communication between AI and human intelligence can become not only efficient but also meaningful, contributing to a society in which technology serves as a catalyst for human progress, not a replacement for it.

In this context, designing human-centred AI becomes a strategic imperative. AI systems must be built not only for efficiency but also for understanding, empathy, and social responsibility. Human-AI communication should reflect not only technological competence but also the values of the communities that use it. Achieving this vision requires interdisciplinary collaboration among educators, engineers, psychologists, and philosophers throughout the development process.

In the long run, the success of integrating AI into society will depend on our ability to cultivate informed and conscious citizens – individuals who can engage critically and constructively with technology. Education thus plays a crucial role in preparing a generation that not only understands how AI works but also recognizes why the way we communicate with it matters. Only through such an approach can we turn technological potential into meaningful human progress.

Tegmark (2017) envisions a bold future in which AI becomes an integral part of “Life 3.0” – an evolutionary stage where intelligence can reshape not only culture but also the biological structure of the human species. In such a scenario, effective and ethical communication between humans and AI takes on a vital role in ensuring progress that is aligned with human values.

The interaction between artificial and human intelligence is an emerging field with deep pedagogical, social, and ethical implications. This study suggests that this interaction is not a mere technological process, but a complex relationship shaped by factors such as digital literacy, trust, empathy, and the adaptability of both human and machine agents.

With this in mind, several future research directions are proposed:

- Expanding the research sample to include high school and university-level education, to capture variations in perception based on age and educational level;

- Conducting longitudinal studies to track how human-AI interaction evolves over time and how it affects academic performance and motivation;

- Examining the impact of algorithmic empathy in both educational and therapeutic contexts;

- Exploring AI-mediated human-to-human communication, to better understand how AI influences interactions between students or between teachers and parents;

- Designing AI-enhanced pedagogical models that leverage the adaptive capacity of intelligent systems without undermining student autonomy or the formative role of educators.

In a world undergoing rapid digital transformation, the integration of artificial intelligence must be accompanied by critical thinking, interdisciplinary dialogue, and a human-centred educational vision. Only by following this path can we ensure a balanced and sustainable relationship between the two types of intelligence – human and artificial.

References

Blaschke, L. M. (2012). Heutagogy and lifelong learning: A review of heutagogical practice and self-determined learning. The International Review of Research in Open and Distributed Learning, 13(1), 56–71. https://doi.org/10.19173/irrodl.v13i1.1076

Bostrom, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford University Press.

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Daugherty, P. R., & Wilson, H. J. (2018). Human + Machine: Reimagining Work in the Age of AI. Harvard Business Review Press.

Dignum, V. (2019). Responsible Artificial Intelligence: How to Develop and Use AI in a Responsible Way. Springer.

Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., et al. (2018). AI4People – An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds and Machines, 28(4), 689–707.

Hagendorff, T. (2020). The Ethics of AI Ethics: An Evaluation of Guidelines. Minds and Machines, 30, 99–120. https://doi.org/10.1007/s11023-020-09517-8

Holmes, W., Bialik, M., & Fadel, C. (2022). Artificial intelligence in education: Promises and implications for teaching and learning. Center for Curriculum Redesign.

Istrate, O., Velea, S., & Ceobanu, C. (2025). Pedagogie digitală [Digital pedagogy]. Iași: Polirom.

Lockery, M., & Gutteridge, N. (2022). Artificial Intelligence and the prospects for education: Can AI make personalized, self-paced learning a reality? Journal of Educational Technology & Society, 25(1), 11–26.

Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2016). Intelligence Unleashed: An argument for AI in education. Pearson Education.

Russell, S., & Norvig, P. (2021). Artificial Intelligence: A Modern Approach. Pearson.

Ryan, R. M., & Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist, 55(1), 68–78. https://doi.org/10.1037/0003-066X.55.1.68

Selwyn, N. (2019). Should Robots Replace Teachers? AI and the Future of Education. Polity Press.

Tan, L. Y., Hu, S., Yeo, D. J., & Cheong, K. H. (2025). Artificial intelligence-enabled adaptive learning platforms: A review. Computers and Education: Artificial Intelligence, 6, 100429. https://doi.org/10.1016/j.caeai.2025.100429

Tegmark, M. (2017). Life 3.0: Being Human in the Age of Artificial Intelligence. Alfred A. Knopf. https://archive.org/details/life30beinghuman0000tegm/mode/2up

Timms, M. J. (2022). Human-AI collaboration for personalised education. International Journal of Information and Learning Technology. https://doi.org/10.1108/IJILT-06-2021-0113

Van den Berghe, R., Vasalou, A., Zaphiris, P., Pitt, J., & Pouloudi, A. (2021). The teacher in AI: Exploring educators’ perceptions of ethical issues in AI-supported learning environments. Learning, Media and Technology, 46(1), 80–94.

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? International Journal of Educational Technology in Higher Education, 16, 39. https://doi.org/10.1186/s41239-019-0171-0

____________________

Author:

Cristina-Georgiana Voicu

Titu Maiorescu Secondary School, Iași, Romania

voicucristina2004@yahoo.fr


https://orcid.org/0000-0001-9299-6551

Received: 27.05.2025. Accepted and published: 25.07.2025

© Cristina-Georgiana Voicu, 2025. This open access article is distributed under the terms of the Creative Commons Attribution Licence CC BY, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited:

Citation:

Voicu, C.-G. (2025). Human-AI Interaction in Romanian Schools: Explorations of Algorithmic Empathy and Digital Co-Creation. Journal of Digital Pedagogy, 4(1), 56–69. Bucharest: Institute for Education. https://doi.org/10.61071/JDP.2540